The Objective Structured Clinical Examination (OSCE) is an excellent method for evaluating students' abilities, but there are no previous reports of its implementation in dermatology.

Objectives: To determine the feasibility of implementing a dermatology OSCE in medical school.

Methods: Five stations with standardized patients and image-based assessment were designed. A specific checklist was developed for each station, with items that each evaluated one competency; the items were classified into five groups (medical history, physical examination, technical skills, case management and prevention).

Results: A total of 28 students were tested. Twenty-five of them (83.3%) passed the exam overall. By group of items, medical interrogation had a mean score of 71.0, physical examination 63.0, case management 58.0 and prevention 58.0 points. The highest results were obtained in the interpersonal skills items, with 91.8 points.

Limitations: Testing a small sample of volunteer students may limit the generalizability of our study.

Conclusions: The OSCE is a useful and feasible tool for assessing clinical skills in dermatology. Our experience shows that the medical school curriculum needs to establish the OSCE as an assessment tool in dermatology.


The European Higher Education Area (EHEA) has introduced important changes in Spanish medical education. Clinical practice is no longer considered merely a complement to medical theory, in which the student learned passively through observation and listening. With the implementation of the EHEA, also known as the Bologna Process, undergraduate medical students spend more time in clinical practice and know which competencies they should acquire during their clerkships.1,2

Traditionally, clinical practice was assessed with written and oral examinations, most of them not standardized. Although direct observation of performance is the most effective evaluation method, it is often not feasible because it is difficult to implement in a standardized way. In recent years, multiple assessment methods have been used to evaluate clinical competencies. Analysis of critical incidents, the Objective Structured Clinical Examination (OSCE), videotapes and simulation have been described as good assessment methods, alone or in combination.3

Simulation has been used in dermatology to teach and evaluate clinical competencies such as the detection of cutaneous malignancies and procedural skills in dermatologic surgery.4,5 Recognition of melanoma has also been evaluated by simulation with standardized patients,6 using temporary tattoos and moulages, which have been validated as good teaching tools.7,8 To assess multiple clinical competencies, Harden et al. designed the OSCE in 1975.9 In an OSCE, students are evaluated in a series of stations where they are asked to perform a task (take a clinical history, conduct a physical examination and/or interpret complementary tests).

The OSCE has been reported to be a powerful method for assessing competence in healthcare professionals.10–12 At our institution, medical students take an OSCE as a final test of clinical competencies at the end of their sixth year. The growing importance of assessing clinical practice also extends to the dermatology clerkship, where new evaluation methods must be implemented. The objective of this study was to develop and apply, for the first time, a dermatological OSCE for sixth-year medical students.

Materials and methods

The present study used a quantitative and qualitative approach and took place in the Faculty of Medicine of the University of Alcalá. The four steps recommended by Harden and Gleeson were followed to develop the OSCE.13

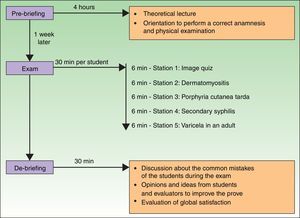

Plan

We selected the most important topics on which to base the stations. In Spain, medical school graduates take a national exam to enter postgraduate specialty training. In that exam, the most frequently asked dermatology topics over the last 10 years were cutaneous cancer (26.8%), cutaneous manifestations of systemic disease (23.2%), papulosquamous dermatoses (7.3%), vesiculobullous diseases (4.9%), cutaneous infections (4.9%) and others. Based on these data, we designed 5 stations: four simulated situations using standardized patients and one image-based assessment. The clinical simulation stations were secondary syphilis, dermatomyositis, porphyria cutanea tarda and varicella in an adult; the fifth was an image-based station (Fig. 1).

Each station had different items, each of which evaluated one competency. The items were classified into four groups (medical interrogation, physical examination, case management and prevention) and were scored by an examiner on a binary (YES/NO) checklist. We avoided items whose score could depend on the examiner's interpretation, such as statements containing qualifying adjectives or adverbs (“well done”, “performed correctly”). All the stations with standardized patients had an additional group of items assessing verbal and nonverbal communication, empathy and efficient transmission of information. The highest possible score for each checklist was 100. As an example, the checklist for the “secondary syphilis” station is shown in Table 1.

Table 1. Checklist of the secondary syphilis station. Each item was evaluated during the exam; its score was awarded if the student completed the item, and the maximum score for every station was 100.

| Checklist: “secondary syphilis” | Yes | No | Score |
|---|---|---|---|
| Medical interrogation (student asks about:) | | | |
| 1. Features of the skin lesions: distribution, onset, changes in morphology, distribution or configuration, and symptoms such as pain or itching (at least two). | | | 5 |
| 2. Personal history of unsafe sex. | | | 15 |
| 3. New drug intake or use of any topical products (at least one). | | | 10 |
| 4. Presence of fever, fatigue, muscle weakness or joint pain (at least one). | | | 5 |
| Physical examination | | | |
| 1. Asks the patient to undress in order to examine the entire body surface. | | | 5 |
| 2. Examines the genitalia. | | | 10 |
| Case management | | | |
| 1. Orders syphilis serology tests. | | | 15 |
| 2. Orders other serology tests to rule out other STIs (HIV, HBV). | | | 10 |
| Prevention | | | |
| 1. Recommends barrier contraceptive methods during sexual activity. | | | 15 |
| 2. Recommends notifying recent sexual partners of their risk of infection. | | | 10 |
| Interpersonal skills | | | |
| 1. Good general appearance, hygiene, correct posture. | | | 1 |
| 2. Active listening, attention without interruptions, eye contact while speaking. | | | 1 |
| 3. Kindness, warm reception, smiling. | | | 1 |
| 4. Respectful, without criticism or derogatory judgements. | | | 1 |
| 5. Composure, with emotional control. | | | 1 |
| 6. Optimism, trying to encourage the patient. | | | 1 |
| 7. Contact: physical contact during examination is kind and careful. | | | 1 |
| 8. Shows interest in the patient's opinions, beliefs, values, worries or emotions. | | | 1 |
| 9. Intelligible speech, clearly understood by the patient throughout the interview. | | | 1 |
| 10. Empathy: sympathizes with the patient's intense emotions (pain, anxiety, joy, …). | | | 1 |
| Total | | | 100 |
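The binary scoring rule behind checklists such as Table 1 can be sketched in a few lines of Python. This is a minimal sketch, assuming that a YES earns the item's full weight, a NO earns nothing and there is no partial credit; the `Item` class and the `score_checklist` function are hypothetical names, and only the medical interrogation and physical examination items of Table 1 are used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    group: str        # item group, e.g. "Medical interrogation"
    description: str  # what the examiner looks for
    weight: int       # points awarded when the examiner ticks YES


def score_checklist(items: list[Item], ticks: list[bool]) -> dict[str, int]:
    """Return the points earned per item group plus a 'Total' entry.

    Binary scoring: YES gives the item's full weight, NO gives zero.
    """
    if len(items) != len(ticks):
        raise ValueError("one YES/NO tick is required per checklist item")
    scores: dict[str, int] = {}
    for item, done in zip(items, ticks):
        scores.setdefault(item.group, 0)  # groups appear in checklist order
        if done:
            scores[item.group] += item.weight
    scores["Total"] = sum(scores.values())
    return scores


# Subset of the "secondary syphilis" checklist (weights from Table 1).
syphilis_subset = [
    Item("Medical interrogation", "Skin lesion features (at least two)", 5),
    Item("Medical interrogation", "Personal history of unsafe sex", 15),
    Item("Medical interrogation", "New drug intake or topical products", 10),
    Item("Medical interrogation", "Fever, fatigue, weakness, joint pain", 5),
    Item("Physical examination", "Asks the patient to undress", 5),
    Item("Physical examination", "Genitalia examination", 10),
]

# Hypothetical student who forgets only the drug-intake question.
result = score_checklist(syphilis_subset, [True, True, False, True, True, True])
print(result)
```

Because every item is a fixed weight attached to a YES/NO tick, two examiners who agree on the ticks necessarily agree on the score, which is the property the authors sought by avoiding items that depend on the examiner's interpretation.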

All the stations were reviewed by the teachers of the Teaching Support Center of the University of Alcalá, who have years of experience in planning and running the final-year OSCE, and minor changes were proposed. Dermatology residents were trained to act as standardized patients (4 of them) and as station examiners (5 of them). Skin disorders were shown as digital pictures on electronic devices or, when possible, created with makeup on the standardized patient. Taking the dermatological OSCE was optional for all sixth-year students. We offered 30 places so that the OSCE could be completed in one afternoon.

Pre-briefing

One week before the dermatological OSCE, the students took a test with fifteen image-based questions about several dermatologic cases. They then attended a lecture consisting of clinical cases and a review of the most important aspects of common dermatologic diseases. They also received a standard orientation that emphasized the importance, in dermatology, of taking an appropriate history and performing a correct physical examination. Students had the opportunity to visit the scenarios to become familiar with the rooms and the material. All rooms had one or two cameras with microphones so that students' performance could be evaluated without interference (Fig. 2).

Exam

The 30 students were called in groups of 5. Before the exam they received basic instructions, and the importance of focusing on the objectives of each station was emphasized. Students rotated through the 5 stations and could spend a maximum of 6 min at each one. One advantage of this rotation approach is that several students can be assessed at the same time. Students could interact with the standardized patients as much as they considered necessary, but examiners were trained not to interfere with the test. In the image-based station, five cutaneous lesions were shown as good-quality printed images, with a brief patient history attached. Students were required to determine whether each lesion was benign or malignant and to give a short list of possible specific diagnoses. The whole group of 30 students was scheduled to be examined in one afternoon.
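The rotation described above can be illustrated with a circular schedule. This is a minimal sketch, assuming that each group of 5 students fills the 5 stations with one student per station and that groups were run one after another; the `rotation` function, the student labels and the station order are hypothetical. The station-time figure covers only the 6 min slots and does not model whatever else filled the afternoon.

```python
STATIONS = [
    "image quiz",
    "dermatomyositis",
    "porphyria cutanea tarda",
    "secondary syphilis",
    "varicella in an adult",
]
MINUTES_PER_STATION = 6  # maximum time allowed at each station


def rotation(group: list[str]) -> list[dict[str, str]]:
    """Circular rotation: in round r, student i is at station (i + r) mod n.

    With one student per station, every station is busy in every round and
    each student visits each station exactly once.
    """
    n = len(STATIONS)
    if len(group) != n:
        raise ValueError("this sketch assumes one student per station")
    return [
        {student: STATIONS[(i + r) % n] for i, student in enumerate(group)}
        for r in range(n)
    ]


group = [f"S{k}" for k in range(1, 6)]  # hypothetical student labels
schedule = rotation(group)
for r, assignment in enumerate(schedule, start=1):
    print(f"Round {r}: {assignment}")

# Station time only, assuming the 30 places are filled by 6 sequential groups.
groups = 30 // len(STATIONS)
station_minutes = groups * len(STATIONS) * MINUTES_PER_STATION
print(f"{groups} groups, {station_minutes} min of station time")
```

The modular shift is what lets five students be assessed simultaneously without two of them ever meeting at the same station.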

De-briefing

After the exam, students and examiners discussed common mistakes and proposed corrections for both the students and the exam. Students and examiners had the opportunity to express their opinions and ideas on how the OSCE could be improved. Students were also able to watch recordings of some of their performances. Finally, they were asked to retake the test they had taken one week earlier during the pre-briefing.

Results

A total of 28 students were tested in one afternoon; two of the original 30 were absent and were therefore not included in the analysis. In the theoretical pre-briefing test, the mean score was 45.5 and 15 students (53.6%) did not pass. Evaluation of the checklists revealed that 25 students (83.3%) passed the exam overall, with a mean score of 58.8 (range 43.5–83.6). For the stations “image quiz”, “dermatomyositis”, “porphyria cutanea tarda”, “secondary syphilis” and “varicella in an adult”, the number of students who passed was 23 (82.1%), 20 (71.4%), 20 (71.4%), 28 (100%) and 22 (78.6%), respectively, and the mean scores were 63.0, 58.0, 79.1, 63.4 and 64.5 points. Medical interrogation was a fairly successful item group, with a mean score of 71.1. Physical examination had worse results (mean score 62.8), with large differences between stations: “secondary syphilis” was the highest rated (98.2 points) and “porphyria cutanea tarda” the lowest (31.0 points). Case management had a mean score of 63.0, also with large differences between stations (highest for “syphilis”, 84.0 points; lowest for “dermatomyositis”, 38 points). Prevention was a secondary item group with few items per station and had a mean score of 51.8 points. Of all the item groups, interpersonal skills obtained the highest results, with a mean score of 93.1 points (range 88–99; Table 2). We detected no statistically significant differences in outcomes by students' sex or age.

Table 2. Mean scores and ranges of students' performance for each group of items. The maximum score was 100 points.

| Station | Medical interrogation, score (range) | Physical examination, score (range) | Case management, score (range) | Prevention, score (range) | Interpersonal skills, score (range) |
|---|---|---|---|---|---|
| Image-based station | NA | 50.9 (16.7–100) | 80.4 (0–100) | 57.1 (42.9–71.4) | NA |
| Dermatomyositis | 85.7 (71.4–100) | 56.0 (64.2–96.4) | 37.5 (14.3–89.3) | NA | 91.1 (82.1–100) |
| Secondary syphilis | 58.0 (10.7–100) | 98.2 (96.4–100) | 83.9 (71.4–96.4) | 76.8 (75–78.6) | 93.9 (60.7–100) |
| Porphyria cutanea tarda | 62.1 (10.7–100) | 31.0 (17.9–53.6) | 64.3 (50–78.6) | NA | 98.9 (92.9–100) |
| Varicella in an adult | 78.6 (71.4–89.3) | 78.6 (71.4–85.7) | 48.8 (31.4–64.3) | 21.4 (0–100) | 88.6 (78.6–96.4) |
| Mean score | 71.1 | 62.9 | 63.0 | 51.8 | 93.1 |

NA, not applicable.
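The per-station pass percentages reported in the Results can be reproduced from the raw counts. This is a minimal sketch, assuming the denominator is the 28 students actually tested (the 30 enrolled minus the 2 absent); the `percent_of_tested` helper is a hypothetical name, and the counts are those given in the text.

```python
# Denominator: students actually tested (30 enrolled, 2 absent).
TESTED = 28

# Number of students who passed each station, as reported in the Results.
passed_by_station = {
    "image quiz": 23,
    "dermatomyositis": 20,
    "porphyria cutanea tarda": 20,
    "secondary syphilis": 28,
    "varicella in an adult": 22,
}


def percent_of_tested(count: int, tested: int = TESTED) -> float:
    """Share of the tested students, as a percentage rounded to one decimal."""
    return round(100 * count / tested, 1)


rates = {station: percent_of_tested(n) for station, n in passed_by_station.items()}
prebriefing_failed = percent_of_tested(15)  # students who failed the pre-briefing test

for station, rate in rates.items():
    print(f"{station}: {rate}%")
print(f"pre-briefing test failed: {prebriefing_failed}%")
```

Keeping the denominator as a single named constant makes it explicit which population each percentage refers to.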

The exam was completed in one afternoon and took four and a half hours, as planned, and no incidents were reported. Students' marks improved between the pre-briefing and de-briefing tests (mean scores of 45.5 and 66.4, respectively). Student feedback on the OSCE was very positive and indicated that the initial design of the exam was appropriate. Students appreciated the opportunity to conduct a medical visit alone for the first time in a safe, simulated scenario. Examiners' overall evaluation of the exam was also positive, although they complained about its duration. All participants would repeat the experience.

Discussion

The OSCE is a reliable approach to assessing basic clinical skills and is increasingly used in medical faculties in Spain and other countries. This method evaluates the third level of Miller's pyramid (“shows how”), at which students demonstrate how they perform these skills.14 Its scores correlate well with students' grades on theory tests,15 it is a useful way to assess surgical skills16 and it provides a good scenario for standardizing student–patient interaction. All these characteristics make the OSCE a well-suited method for evaluating students' skills in dermatology.

One particular challenge in our specialty is the need to examine cutaneous lesions in order to reach a correct diagnosis. However, dermatology is much more than simple “visual diagnosis”, and an integrated clinical approach is still necessary in everyday practice. This allowed us to build complex clinical scenarios in which students could show their abilities in different areas, including clinical examination, which was preserved through high-quality digital pictures on tablets and makeup on the actors in some scenarios (such as the heliotrope rash on the eyelids in dermatomyositis).

A very important point is that the clinical management of all the included dermatoses was rather deficient, with low overall scores (median of 58.0). We found some interesting data in different stations. For instance, in the “dermatomyositis” station all students took a comprehensive history, but only one requested tumor markers; and the “secondary syphilis” station was passed by all students, but only three asked about drugs taken before the onset of the rash. Even in the “varicella” station, only 6 students gave a correct answer regarding prevention of the spread of the infection. In our view, these results reflect the gap between theoretical knowledge and clinical practice among medical students, which must be overcome with better observational programs in medical degrees.

In our OSCE, students demonstrated excellent interpersonal skills, such as empathy and oral communication with the patient. In Spain, female gender, more advanced courses, close personal experience of illness and involvement in voluntary activities are variables associated with empathy in medical students.17 However, we did not detect differences in empathy between genders in our OSCE, probably because we included only final-year students and empathy is a skill that may be developed through practice.18 In addition, we did not use validated instruments to study empathy, as other studies have done,17,18 but rather a validated checklist for evaluating interpersonal skills in an OSCE.

Assessment in dermatology helps teachers and students identify mistakes and needs in clinical skills. Medical students begin their clinical training aiming to learn how to apply basic rule-based formulas in specific situations.19 As a result, many anamnesis questions are asked as part of a “checklist”, without a conscious intention of obtaining relevant information. We observed correctly structured history-taking, but some difficulty in arriving at a specific diagnosis. These simulated experiences raise awareness of the “need to know” and give the OSCE an important role in the learning process.20,21 Although a multiple-choice test is not the best way to evaluate physician performance, we documented an improvement in theoretical knowledge on the multiple-choice test. This suggests a potential role for simulated clinical encounters in bridging the gap between theoretical dermatological knowledge and its application.

One issue to consider is the difficulty of training medical staff, rather than professional actors, to perform each station of the OSCE, since coaching them properly takes a long time.19 Furthermore, testing as many students as possible requires a large amount of manpower. For instance, 9 people were needed to examine a group of only 30 students over a whole afternoon (four and a half hours). Nevertheless, all students, actors and examiners were satisfied at the end of the OSCE, and we are able to report the first experience with a full dermatology OSCE.

Limitations of our study must be noted. Generalization of the results may be limited by the voluntary nature of the exam and the small sample size. Furthermore, the correct answers to the exam questions were fixed, so any input from students that did not fit the pre-arranged answers was not evaluated; this is an intrinsic characteristic of the OSCE. Nevertheless, the study achieved its main objective, which was to overcome the technical barriers to performing an OSCE in dermatology.

In conclusion, despite the difficulties of developing an OSCE, it is an excellent method for assessing clinical skills in dermatology and a good way to evaluate undergraduate medical students. Students' performance in our OSCE shows that the medical school curriculum needs to give more weight to clinical practice in dermatology, so that students recognize the importance of obtaining a correct dermatologic history, of physical examination skills and of the basic management of common skin disorders. We hope our experience will encourage other centers to include the OSCE in their dermatology programs.

Ethical disclosures

Protection of human and animal subjects: The authors declare that no experiments were performed on humans or animals for this study.

Confidentiality of data: The authors declare that no patient data appear in this article.

Right to privacy and informed consent: The authors declare that no patient data appear in this article.

Conflict of interests: The authors declare no conflict of interest.

We thank the Teaching Support Center (Centro de Apoyo a la Docencia) of the University of Alcalá for technical support, and all the dermatology residents of Hospital Ramón y Cajal for their help during the OSCE.